Have you ever heard about ‘Black Box Deep’ learning? The term might sound mysterious, but it simply means that deep learning models, like those used in AI, are hard to understand. When we talk about ‘Black Box Deep’ learning, we’re saying that it’s difficult to see what’s happening inside these models and how they make decisions.

This can be a problem because, without understanding the process, it’s hard to trust these models fully. Imagine using a machine that makes important decisions for you, but you have no idea how it works! That’s why learning more about ‘Black Box Deep’ models is so important, especially as AI becomes a bigger part of our lives.

1. What is ‘Black Box Deep’ Learning and Why Does It Matter?

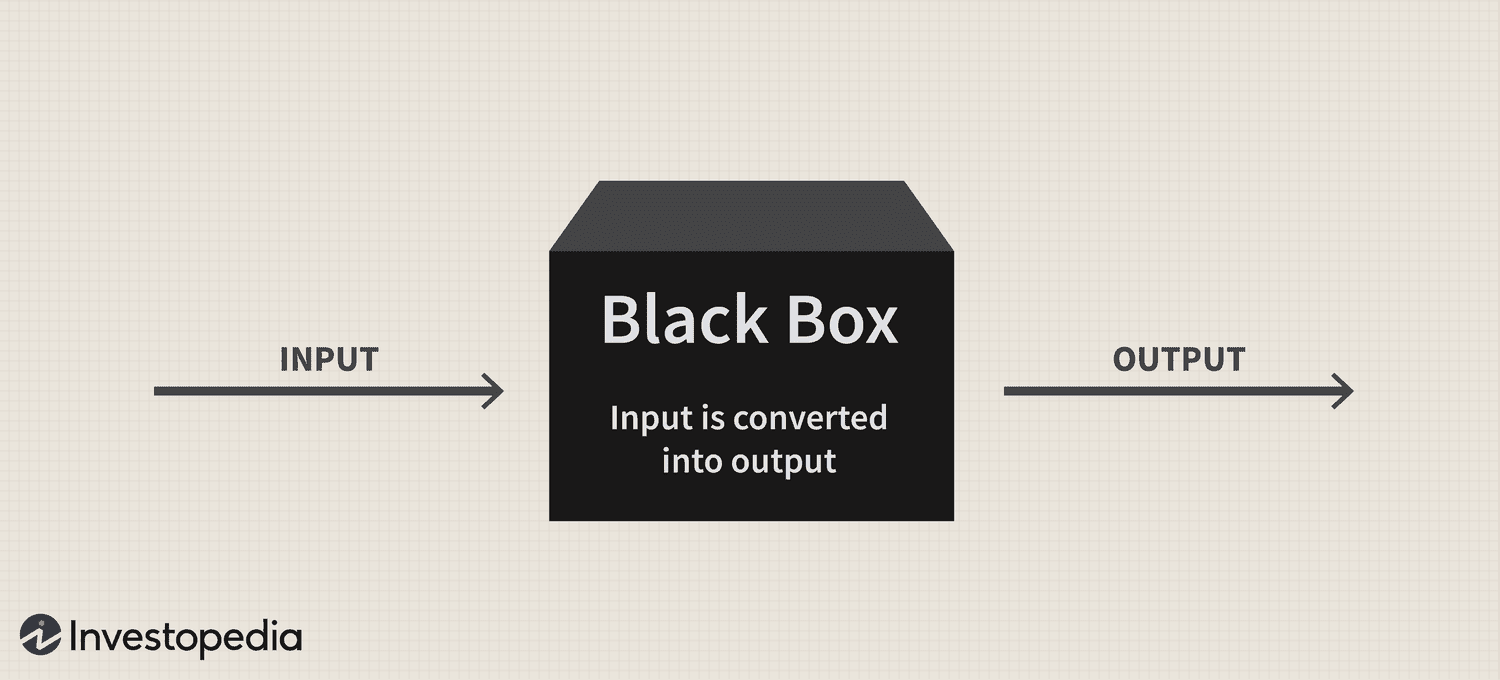

‘Black Box Deep’ learning is a type of artificial intelligence (AI) where the inner workings are hard to understand. It’s called “black box” because, like a sealed box, you can’t see what’s happening inside. You just know what goes in and what comes out. This is important because we use AI in many areas of life, from online shopping to medical diagnosis, and it’s crucial to know how these decisions are made.

In ‘Black Box Deep’ learning, the AI learns from large amounts of data instead of following rules written by a person. It finds patterns and stores them as numbers inside the model, but it doesn’t explain them to us. This can be confusing because even the people who build the AI sometimes don’t fully understand how it works. That’s why it’s called a black box: what happens inside is hidden.

Understanding why ‘Black Box Deep’ learning matters is essential. If we don’t know how the AI makes decisions, it’s hard to trust it completely. This is especially true in areas like healthcare, where a wrong decision could have serious consequences. So, even though ‘Black Box Deep’ learning is powerful, we need to find ways to make it more transparent.

2. The Mystery Behind ‘Black Box Deep’ Models in AI

The mystery of ‘Black Box Deep’ models in AI lies in how they work. These models can take in a lot of information and make decisions that seem almost magical. But the problem is, we don’t always know how they arrive at these decisions. This is like trying to understand a magician’s trick without seeing the secret behind it.

When AI uses ‘Black Box Deep’ models, it processes information in layers. Each layer learns something new, but it doesn’t tell us what it learned. This can be confusing because we see the result but not the process. That’s why many people find ‘Black Box Deep’ learning both fascinating and frustrating.

The challenge with ‘Black Box Deep’ models is that they are not always easy to explain. When AI makes a mistake, it’s hard to figure out why. This can be a problem if the AI is making decisions in critical areas, like driving a car or diagnosing a disease. So, while ‘Black Box Deep’ models are impressive, they also come with their own set of challenges.

3. How ‘Black Box Deep’ Learning Works: A Simple Explanation

Understanding how ‘Black Box Deep’ learning works can be tricky, but let’s break it down. Imagine a machine that learns by itself. It looks at a lot of information, like pictures or words, and figures out patterns on its own. This is what happens in ‘Black Box Deep’ learning. The information passes through a stack of layers, and each layer turns it into something a little more useful for the final answer.

Each layer in ‘Black Box Deep’ learning does something different. The first layer might look at simple things, like shapes or colors. The next layer might put these shapes together to see objects. But the tricky part is that the AI doesn’t tell us what it’s learning at each step. We only see the final answer, not how it got there.
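To make the idea of layers concrete, here is a minimal sketch of a tiny two-layer network in plain Python. The weights are random rather than learned, and the helper names (`dense`, `make_layer`, `forward`) are made up for this example. The point is only to show that every layer produces in-between numbers that we can print but that don’t come with labels or explanations.

```python
import random

def relu(values):
    """Keep positive values, replace negative ones with zero."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def make_layer(n_in, n_out, rng):
    """Create a layer with random weights (a real model would learn these from data)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(inputs, layers):
    """Run the input through each layer, keeping every intermediate result."""
    activations = [list(inputs)]
    current = list(inputs)
    for i, (weights, biases) in enumerate(layers):
        current = dense(current, weights, biases)
        if i < len(layers) - 1:  # apply ReLU on every layer except the last
            current = relu(current)
        activations.append(current)
    return activations

rng = random.Random(0)
layers = [make_layer(4, 3, rng), make_layer(3, 2, rng)]
activations = forward([0.5, -1.0, 2.0, 0.1], layers)

for depth, values in enumerate(activations):
    print(f"layer {depth}: {[round(v, 3) for v in values]}")
```

Even in this toy case, the middle row of numbers is just a list of values. Nothing in the model says which one stands for a “shape” or a “color”.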

In ‘Black Box Deep’ learning, the AI uses complex math to make decisions. Each individual step is fairly simple, mostly multiplying and adding numbers, but a model can chain together millions of these steps, and that is what even experts find hard to follow. That’s why it’s called a black box: it’s not easy to see what’s going on inside. But even though it’s hard to understand, this kind of learning is very powerful and is used in many smart technologies today.
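One reason the inside is so hard to read is sheer size. As a rough sketch, the snippet below counts the adjustable numbers (weights and biases) in a small fully connected network. The layer sizes are an assumption chosen for illustration, not taken from any particular model.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    Each pair of neighboring layers needs n_in * n_out weights
    plus one bias per output.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total

# Hypothetical sizes: 784 inputs, two hidden layers, 10 outputs.
layer_sizes = [784, 128, 64, 10]
total = count_parameters(layer_sizes)
print(total)  # 109386
```

Even this small example has over a hundred thousand numbers working together, and no single one of them explains a decision on its own.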

4. Why ‘Black Box Deep’ Models Are Hard to Understand

‘Black Box Deep’ models are hard to understand because they use complex methods to learn. These methods involve a lot of math and data processing, which can be difficult to follow. The AI doesn’t explain its steps, so we don’t know exactly how it makes its decisions. This lack of explanation is what makes these models a black box.

When we talk about ‘Black Box Deep’ models, we’re talking about systems that learn on their own. They don’t need detailed instructions; they figure things out by looking at patterns. But because they do this in such a complicated way, even the people who create them don’t always know how they work. This can be a problem if the model makes a mistake or if we need to understand its reasoning.

The reason ‘Black Box Deep’ models are hard to understand is that they process information in ways that aren’t clear. The AI might weigh hundreds or even thousands of details all at once, making connections that are hard for us to see. This can make it difficult to trust the model, especially in situations where understanding the process is important.

5. The Challenges of Trusting ‘Black Box Deep’ AI Systems

Trusting ‘Black Box Deep’ AI systems can be a challenge because we don’t always know how they work. If you can’t see how a decision is made, it’s hard to feel confident that it’s the right one. This is especially true in important areas like healthcare, finance, and safety, where mistakes can have big consequences.

One of the main challenges with ‘Black Box Deep’ AI systems is their complexity. These systems can analyze huge amounts of data and make decisions faster than any human. But because they are so complex, even experts can find it difficult to explain why a certain decision was made. This lack of transparency makes it harder to trust the system.

Another challenge is that ‘Black Box Deep’ AI systems can be unpredictable. Sometimes they make decisions that seem strange or incorrect, and without understanding how the system works, it’s hard to fix these mistakes. This can make people hesitant to rely on AI for critical tasks. Building trust in ‘Black Box Deep’ systems requires more transparency and better ways to explain how they work.

6. Can We Make ‘Black Box Deep’ Learning More Transparent?

Making ‘Black Box Deep’ learning more transparent is an important goal for many researchers. Transparency means being able to see and understand how decisions are made. In ‘Black Box Deep’ learning, this is difficult because the process is hidden. But there are ways to make it clearer, and researchers are working hard to find solutions.

One way to make ‘Black Box Deep’ learning more transparent is by developing tools that help explain how the AI makes decisions. These tools can show us what the AI is focusing on when it makes a choice. This can be helpful in understanding the process and making sure the decisions are fair and accurate.
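One simple version of such a tool is a sensitivity check: nudge each input a tiny bit and see how much the output moves. The sketch below assumes a made-up `black_box` function standing in for a trained model. Real explanation tools are more sophisticated, so treat this as an illustration of the idea rather than a recommended method.

```python
import math

def black_box(features):
    """Hypothetical stand-in for a trained model: returns a score between 0 and 1."""
    x0, x1, x2 = features
    z = 3.0 * x0 - 0.5 * x1 + 0.0 * x2 + 0.2 * x0 * x1
    return 1.0 / (1.0 + math.exp(-z))

def sensitivity(model, features, step=1e-4):
    """Estimate how much the output changes when each input is nudged slightly."""
    scores = []
    for i in range(len(features)):
        up = list(features)
        down = list(features)
        up[i] += step
        down[i] -= step
        # Central difference: slope of the output with respect to input i.
        scores.append((model(up) - model(down)) / (2 * step))
    return scores

example = [0.2, 0.4, 0.9]
scores = sensitivity(black_box, example)

for i, score in enumerate(scores):
    print(f"input {i}: sensitivity {score:+.4f}")
```

A large value means the model is paying close attention to that input for this particular example, and a value of zero means the input made no difference here.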

Another approach is to simplify the models so that they are easier to understand. This might mean using fewer layers or less complicated math. By making ‘Black Box Deep’ learning more transparent, we can increase trust in AI systems and use them more confidently in everyday life. Even though it’s challenging, making these models easier to understand is a step in the right direction.

7. Understanding the Risks of ‘Black Box Deep’ Learning in Everyday Life

‘Black Box Deep’ learning has many benefits, but it also comes with risks. One of the biggest risks is not knowing how the AI makes decisions. In everyday life, we rely on AI for many things, like choosing what to watch online or even driving our cars. But if we don’t understand how these decisions are made, we could be putting ourselves at risk.

Another risk of ‘Black Box Deep’ learning is that it can make mistakes that are hard to detect. For example, if an AI misinterprets data, it might give the wrong answer. Because the process is hidden, we might not realize there’s a problem until it’s too late. This is why understanding and monitoring ‘Black Box Deep’ systems is so important.
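A basic form of monitoring is to keep track of how often recent predictions turned out to be right. The sketch below uses a hypothetical `AccuracyMonitor` class, with an assumed window size and threshold, and a made-up history of predictions. It raises a flag once accuracy over the latest few predictions drops too low.

```python
from collections import deque

class AccuracyMonitor:
    """Tracks accuracy over the most recent predictions and flags drops."""

    def __init__(self, window=5, threshold=0.6):
        self.results = deque(maxlen=window)  # old results fall off automatically
        self.threshold = threshold

    def record(self, predicted, actual):
        self.results.append(predicted == actual)

    def accuracy(self):
        return sum(self.results) / len(self.results)

    def needs_review(self):
        # Only judge once the window is full, to avoid noisy early alarms.
        full = len(self.results) == self.results.maxlen
        return full and self.accuracy() < self.threshold

monitor = AccuracyMonitor(window=5, threshold=0.6)

# Made-up (predicted, actual) pairs: the model starts well, then drifts.
history = [(1, 1), (0, 0), (1, 1), (1, 0), (0, 1), (1, 0), (0, 1)]

alerts = []
for predicted, actual in history:
    monitor.record(predicted, actual)
    alerts.append(monitor.needs_review())

print(alerts)
```

This kind of check does not explain why the model went wrong, but it can tell us that something changed before the problem grows.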

In everyday life, the risks of ‘Black Box Deep’ learning can affect our safety, privacy, and trust. As AI becomes more common, it’s crucial to find ways to reduce these risks. By making ‘Black Box Deep’ learning more transparent, we can better protect ourselves and make sure these systems work in our best interests.

8. Why ‘Black Box Deep’ Models Need Better Interpretability

‘Black Box Deep’ models need better interpretability so we can understand how they work. Interpretability means being able to explain why a model made a certain decision. Without this, it’s hard to trust the model or fix it if something goes wrong. This is why improving interpretability is a key focus in AI research.

In ‘Black Box Deep’ models, the lack of interpretability is a big challenge. These models are powerful, but their complexity makes them hard to understand. Better interpretability would mean we could see the steps the AI took to reach its decision. This would help us catch mistakes, improve the model, and ensure it’s working correctly.

Improving interpretability in ‘Black Box Deep’ models would also make AI more accessible. If more people can understand how AI works, it could be used more widely and effectively. This is why many experts believe that making ‘Black Box Deep’ models easier to interpret is essential for the future of AI.

9. How to Approach ‘Black Box Deep’ Learning Safely

Approaching ‘Black Box Deep’ learning safely means understanding its limits and risks. Because these models are complex, we need to be careful about how we use them. This is especially important in areas where mistakes can be costly, like medicine or finance. To use ‘Black Box Deep’ learning safely, it’s important to have safeguards in place.

One way to approach ‘Black Box Deep’ learning safely is by using tools that help explain the model’s decisions. These tools can provide insights into how the AI is working and whether it’s making good choices. This can help build trust in the system and make it easier to catch errors before they cause problems.

Another important step is to continuously monitor ‘Black Box Deep’ models. By keeping an eye on how the model is performing, we can spot any issues early on. This can prevent mistakes and ensure that the AI is doing its job correctly. Approaching ‘Black Box Deep’ learning with caution and care

13. The Role of Data in ‘Black Box Deep’ Learning Models

In ‘Black Box Deep’ learning models, data plays a crucial role. The AI uses data to learn and make decisions, but it’s often unclear how this data affects the outcomes. The data can be anything from images and text to numbers and sounds. Understanding the role of data in these models helps us get a clearer picture of how the AI works.

When a ‘Black Box Deep’ learning model is trained, it processes large amounts of data to find patterns and make decisions. However, we don’t always know which parts of the data are most important. This lack of clarity can make it difficult to understand how the AI reached its conclusions.

To make ‘Black Box Deep’ models more transparent, researchers are looking at ways to analyze the data used by the AI. By understanding how different types of data influence the model’s decisions, we can gain better insights into its behavior. This can help improve the model and make its decisions more reliable.
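One common way to study which parts of the data matter is permutation importance: shuffle one column of the data, and see how much worse the model gets. The sketch below assumes a made-up `black_box` model and randomly generated data, so the numbers only illustrate the technique.

```python
import random

def black_box(row):
    """Hypothetical trained model: leans on feature 0, barely uses 1, ignores 2."""
    return 3.0 * row[0] + 0.5 * row[1] + 0.0 * row[2]

def mean_squared_error(model, rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(model, rows, targets, rng):
    """For each feature, shuffle its column and measure how much the error grows."""
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for col in range(len(rows[0])):
        shuffled_col = [r[col] for r in rows]
        rng.shuffle(shuffled_col)
        shuffled_rows = [r[:col] + [v] + r[col + 1:]
                         for r, v in zip(rows, shuffled_col)]
        error = mean_squared_error(model, shuffled_rows, targets)
        importances.append(error - baseline)
    return importances

rng = random.Random(42)
rows = [[rng.random() for _ in range(3)] for _ in range(200)]
targets = [black_box(r) for r in rows]  # toy setup: the model is perfect on this data

importances = permutation_importance(black_box, rows, targets, rng)
for i, value in enumerate(importances):
    print(f"feature {i}: importance {value:.4f}")
```

A feature whose shuffling barely changes the error is one the model hardly relies on, which is useful to know even when the model itself stays a black box.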

14. How ‘Black Box Deep’ Models Impact Decision-Making

‘Black Box Deep’ models impact decision-making in many areas, from finance to healthcare. These models can make predictions and decisions that affect people’s lives. However, because the decision-making process is hidden, it can be challenging to understand how the AI arrived at its conclusions.

In finance, ‘Black Box Deep’ models might be used to predict stock prices or assess credit risk. If the AI makes a decision based on hidden factors, it can be difficult for users to understand or trust that decision. This lack of transparency can lead to concerns about fairness and accuracy.

In healthcare, ‘Black Box Deep’ models might help diagnose diseases or recommend treatments. Understanding how these models make decisions is crucial because it can impact patient care. If the decision-making process is not clear, it can be hard to ensure that the AI is making the best choices for patients.

15. Innovations in ‘Black Box Deep’ Learning Transparency

Innovations in ‘Black Box Deep’ learning transparency are making it easier to understand how AI models work. Researchers are developing new methods and tools to shed light on the inner workings of these complex models. These innovations aim to make AI more understandable and trustworthy.

One innovation is the development of explainable AI (XAI) tools. These tools provide insights into how ‘Black Box Deep’ models make decisions. They can show which features of the data are most influential and how the model processes them. This helps users get a better understanding of the AI’s decision-making process.

Another approach is to simplify the models themselves. By reducing the complexity of ‘Black Box Deep’ models, researchers can make them more interpretable. This might involve using fewer layers or simpler algorithms. These innovations are helping to make ‘Black Box Deep’ learning more transparent and accessible.
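A related idea, not described above, is a surrogate model: instead of shrinking the complex model, we fit a much simpler one that imitates its answers and then read the simple one. The sketch below assumes a made-up `black_box` function and fits a straight-line (linear) surrogate with ordinary least squares. It is an illustration of the idea under those assumptions, not how any specific tool does it.

```python
import math

def black_box(x0, x1):
    """Hypothetical opaque model: mostly linear, with a small wiggle."""
    return 2.0 * x0 - 1.0 * x1 + 0.1 * math.sin(5.0 * x0)

def solve(matrix, vector):
    """Solve a small linear system with Gaussian elimination and partial pivoting."""
    n = len(vector)
    rows = [list(matrix[i]) + [vector[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(rows[r][col]))
        rows[col], rows[pivot] = rows[pivot], rows[col]
        for r in range(col + 1, n):
            factor = rows[r][col] / rows[col][col]
            for c in range(col, n + 1):
                rows[r][c] -= factor * rows[col][c]
    solution = [0.0] * n
    for i in reversed(range(n)):
        known = sum(rows[i][j] * solution[j] for j in range(i + 1, n))
        solution[i] = (rows[i][n] - known) / rows[i][i]
    return solution

def fit_linear_surrogate(inputs, outputs):
    """Least-squares fit of output ~ bias + w0*x0 + w1*x1 via the normal equations."""
    design = [[1.0, x0, x1] for x0, x1 in inputs]
    k = 3
    gram = [[sum(row[i] * row[j] for row in design) for j in range(k)]
            for i in range(k)]
    moment = [sum(row[i] * y for row, y in zip(design, outputs))
              for i in range(k)]
    return solve(gram, moment)

# Ask the black box for answers on a grid of inputs, then imitate it.
grid = [i / 10 for i in range(11)]
inputs = [(a, b) for a in grid for b in grid]
outputs = [black_box(a, b) for a, b in inputs]

bias, w0, w1 = fit_linear_surrogate(inputs, outputs)
print(f"surrogate: {bias:.3f} + {w0:.3f} * x0 + {w1:.3f} * x1")
```

The surrogate’s two weights are easy to read: the output goes up with `x0` and down with `x1`. The trade-off is that the simple model only approximates the original, so its explanation is approximate too.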

16. The Future of ‘Black Box Deep’ AI Ethics

The future of ‘Black Box Deep’ AI ethics is an important topic as AI becomes more integrated into our lives. Ethical considerations include ensuring that AI systems are fair, transparent, and accountable. As ‘Black Box Deep’ learning models become more powerful, addressing these ethical issues is crucial.

One ethical concern is the potential for bias in ‘Black Box Deep’ models. If the data used to train the AI is biased, the model’s decisions can also be biased. Ensuring fairness and avoiding discrimination are key ethical challenges that need to be addressed.
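One simple check for this kind of bias is to compare how often the model says “yes” for different groups. The sketch below uses a tiny made-up list of decisions, and the group labels `A` and `B` are placeholders. A large gap does not prove discrimination by itself, but it is a signal worth investigating.

```python
from collections import defaultdict

def positive_rate_by_group(decisions):
    """Return the share of positive decisions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}

# Made-up (group, approved) pairs for illustration only.
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = positive_rate_by_group(decisions)
gap = max(rates.values()) - min(rates.values())

print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```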

Another concern is accountability. If a ‘Black Box Deep’ model makes a mistake, it can be difficult to determine who is responsible. Developing ethical guidelines and frameworks for AI use can help address these issues and ensure that AI systems are used responsibly and fairly.

17. Practical Tips for Working with ‘Black Box Deep’ Models

Working with ‘Black Box Deep’ models can be challenging, but there are practical tips to make it easier. One tip is to use visualization tools that can help you see how the model is making decisions. These tools can provide a clearer picture of the AI’s inner workings.

Another tip is to regularly test and validate the model’s performance. By evaluating how the model performs on different types of data, you can ensure that it’s making accurate and reliable decisions. This helps catch any issues early and improves the model’s overall performance.
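Testing on different types of data can be as simple as splitting the test examples into named groups and scoring each group separately. The sketch below assumes a made-up `black_box` classifier and two hypothetical groups of examples, `typical` and `borderline`.

```python
def black_box(value):
    """Hypothetical classifier: predicts 1 when the input exceeds 0.5."""
    return 1 if value > 0.5 else 0

def accuracy(model, examples):
    """Share of examples where the model's prediction matches the label."""
    correct = sum(1 for x, label in examples if model(x) == label)
    return correct / len(examples)

# Made-up (input, correct label) pairs, grouped by type.
slices = {
    "typical": [(0.9, 1), (0.1, 0), (0.8, 1), (0.2, 0)],
    "borderline": [(0.55, 0), (0.45, 1), (0.6, 1), (0.4, 0)],
}

report = {name: accuracy(black_box, examples) for name, examples in slices.items()}
print(report)  # {'typical': 1.0, 'borderline': 0.5}
```

An overall score would hide the weak spot here. Looking at each group separately shows that the model does well on clear cases and struggles on close calls.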

Finally, collaborate with others who have experience in ‘Black Box Deep’ learning. Working with experts can provide valuable insights and help you navigate the complexities of these models. Sharing knowledge and resources can make working with ‘Black Box Deep’ models more manageable and effective.

18. The Impact of ‘Black Box Deep’ Learning on Privacy

‘Black Box Deep’ learning can impact privacy in several ways. AI models often use large amounts of data, which can include personal information. Understanding how these models handle data is important for protecting privacy and ensuring that personal information is used responsibly.

One privacy concern is how data is collected and used. If personal information is used to train ‘Black Box Deep’ models, it’s essential to ensure that it is handled securely. This means protecting data from unauthorized access and ensuring that it is used only for its intended purpose.

Another concern is how data is shared. If the AI model is used by multiple organizations, it’s important to have clear policies on data sharing and usage. Ensuring that data is used ethically and responsibly is crucial for maintaining privacy and trust.

Conclusion

Understanding ‘Black Box Deep’ models is important because it helps us see how these complex AI systems work. These models are powerful, and knowing how they make decisions makes them more trustworthy and useful. By learning more about these models, we can check that they are fair and accurate in their predictions.

In the future, we hope to see more tools and methods that make ‘Black Box Deep’ models easier to understand. This will help us use AI safely and confidently. By working on transparency and human oversight, we can ensure that these advanced technologies benefit everyone while reducing any risks.